During the weekends I decided to take a look at what ML.NET can propose in the area of recommendation engine.

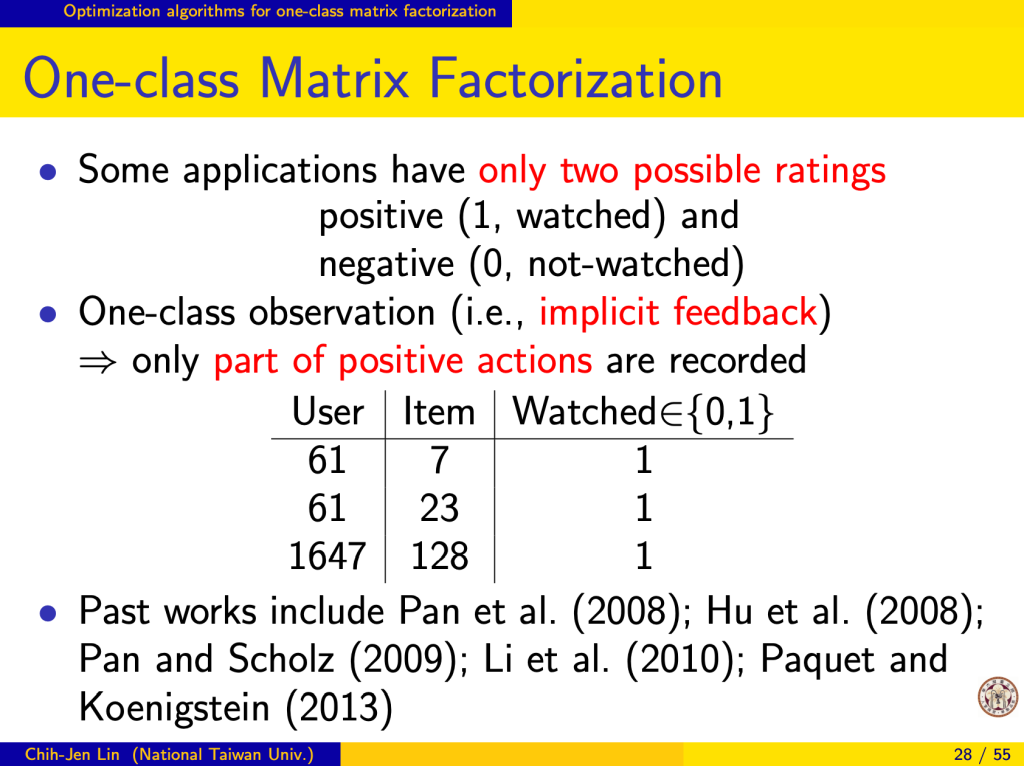

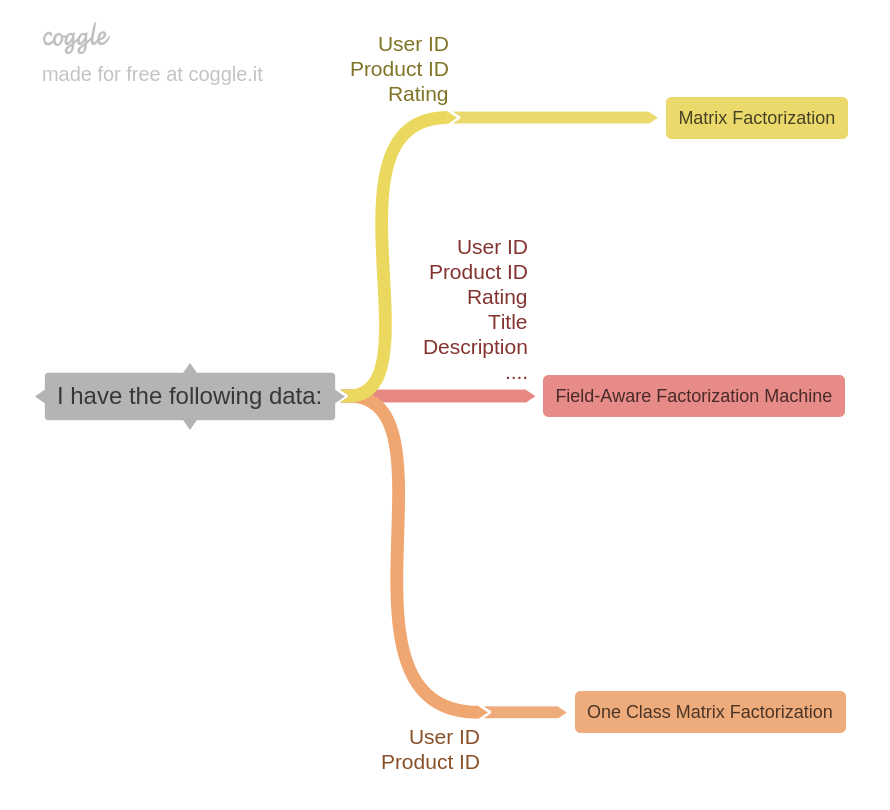

I found a nice picture in Mark Farragher’s blog post that explains three available options:

The choice depends on what information you have.

- If you have sophisticated user feedback like rating (or likes and most importantly dislikes) then we can use Matrix Factorization algorithm to estimate unknown ratings.

- If we have not only rating but other product fields, we can use more advanced algorithm called “Field-Aware Factorization Machine”

- If we have no rating at all then “One Class Matrix Factorization” is the only option for us.

In this post I would like to focus on the last option.

One-Class Matrix Factorization

This algorithm can be used when data is limited. For example:

- Books store: We have history of purchases (list of pairs userId + bookId) without user’s feedback and want to recommend new books for existing users.

- Amazon store: We have history of co-purchases (list of pairs productId + productId) and want to recommend products in section “Customers Who Bought This Item Also Bought”.

- Social network: We have information about user friendship (list of pairs userId + userId) and want to recommend users in section “People You May Know”.

As you already understood, it is applicable for a pair of 2 categorical variables, not only for userId + productId pairs.

Google showed several relevant posts about the usage of ML.NET One Class Matrix Factorizarion:

- Official MS “Product Recommendation – Matrix Factorization problem sample“

- Build A Product Recommender Using C# and ML.NET Machine Learning by Mark Farragher

- Build a Recommendation engine with ML.NET and F# by Riccardo Terrell

After reading all these 3 samples I realised that I do not fully understand what is Label column is used for. Later I came to a conclusion that all three samples most likely are incorrect and here is why.

Mathematical details

Let’s take a look at excellent documentation of MatrixFactorizationTrainer class. The first gem is

There are three input columns required, one for matrix row indexes, one for matrix column indexes, and one for values (i.e., labels) in matrix. They together define a matrix in COO format. The type for label column is a vector of Single (float) while the other two columns are key type scalar.

COO stores a list of (row, column, value) tuples. Ideally, the entries are sorted first by row index and then by column index, to improve random access times. This is another format that is good for incremental matrix construction

So anyway we need three columns. If in the classic Matrix Factorization the Label column is the rating, then for One-Class Matrix Factorization we need to fill it with something else.

The second gem is

The coordinate descent method included is specifically for one-class matrix factorization where all observed ratings are positive signals (that is, all rating values are 1). Notice that the only way to invoke one-class matrix factorization is to assign one-class squared loss to loss function when calling MatrixFactorization(Options). See Page 6 and Page 28 here for a brief introduction to standard matrix factorization and one-class matrix factorization. The default setting induces standard matrix factorization. The underlying library used in ML.NET matrix factorization can be found on a Github repository.

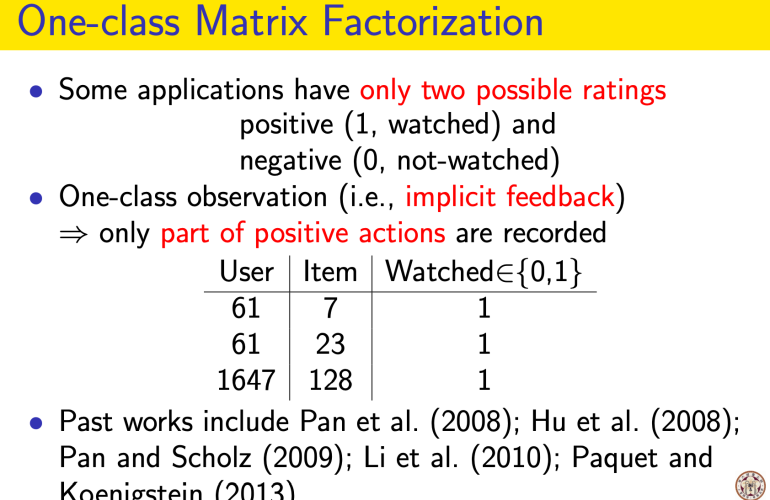

Here is Page 28 from references presentation:

As you see, Label is expected to be always 1, because we watched only One Class (positive rating): user downloaded a book, user purchased 2 items together, there is a friendship between two users.

In the case when data set does not provide rating to us, it is our responsibility to provide 1s to MatrixFactorizationTrainer and specify MatrixFactorizationTrainer.LossFunctionType as loss function.

Here you can find fixes for samples:

Hello Sergey, good article, I appreciate the explanation about the “Label” column that no one in the examples you mention could explain clearly.

I have a dataset with 1,150,000 pairs of prodID and prodIDCopurchase to get “Customers Who Bought This Item Also Bought” but I still can’t get the model to work correctly. According to your article, what I do is add an additional column to my data called “label”, and assign a “1” to each record. Example:

Label ProductID ArtIdCoPurchase

1 42051 43262

1 43599 43262

1 43179 43262

1 34641 38041

1 39508 38041…

When running the model I am assigning “label” in column 0, MatrixColumnIndexColumnName in column 1 and MatrixRowIndexColumnName in column 2.

But the result is frustrating. I have calculated by database the highest combination for a prodID and CoPurchaseID that is repeated 1700 times, but the model predicts a lower score for than other combinations (1,009 vs 1,020 for others).

The metrics I get are:

Mean Absolute Error: 0.05526126081233132

Mean Squared Error: 0.014548097591333041

Root Mean Squared Error: 0.12061549482273429

RSquared: NaN

What am I doing wrong? Is it correct to assign a “Label” column to my data with the value “1”?

Thank you!

Hi, I think that Matrix Factorization may not be the best algorithm for your use case.

One thing that you may try… What if you provide a normalized matrix as an input? Only one row for each pair (ProductID, ArtIdCoPurchase) and float label in the [0,1] range.

if 1700 is the max CoPurchase in your dataset, then this pair will receive label 1. For 1009 purchases label will be 1009/1700=0,5935294118 and so on.

Thanks for your answer.

If we put a normalized Matrix with a single row for ProductId and ProdCoPurchaseId and the label is only [0,1], the information on how many times the product was purchased together with another would be discarded. And if we make a query to the database grouping the number of times and ordering, why do I need Machine Learning?

I’ve proposed to use float labels in the range from 0 to 1. Only few labels will be close to 1 (the most co-purchased pairs).

You basically provide sparse martix as an input for the model. Model restore values that you do not know from the data (“probability” of co-purchaise for pair that you have not provided)

You you provide

A B 0.95

B A 0.95

B C 0.83

C B 0.83

it is a sparse 3×3 matrix

? 0.95 ?

0.95 ? 0.83

? 0.83 ?

factorisation process restore all ? in the matrix

and there should be quite a high chance for a big value for (A,C) pair

p.s. for the co-purcase task also worth trying to prove 1s for the main diagonal as well

A A 1

B B 1

C C 1

because you have the same meaning for columns and row (product co-purchased with itself)

aha, so you say that I do my own calculation of the label (“score”) and take that calculated value to between 0 and 1.

First I group my data to obtain the “score” according to the maximum amount, for example if the products [A B] is repeated 1700 times (and this is the maximum), then my data would be:

A B 1

while if I have [A C] 500 times that data would be

A C 500/1700 (0.294)

So the prediction [A B] could return 1 and [A C] would return 0.294 while the ML could predict other combinations that I haven’t loaded into my data.

The problem is: this prediction would not be “people who bought this product also bought”, because what I need to show is the “best [n] combinations” for a product that the user is viewing. For that, once I calculated the “score” it would be a simple query for the Product “Order By Score DESC” (take[n]). I see that I don’t need a “prediction” for this, so I still don’t understand why in the Matrix Factorization examples they insist that it can be applied to “people who bought this product also bought it”.

What is the difference between “people who bought this product also bought” and “best [n] combinations”?

If you have more than [n] co-purchases for most of your products you really can do “Order By Score DESC” (take[n]). But what will you do for new products that do not yet have enough purchase history?

ML can help you in such cases. You also can compare ML results and “Order By Score DESC” (take[n]) on the most popular products to score the model precision.

P.S. I believe that is important to provide the symmetric matrix for this task

– for “A B x” add “B A x”

– add “A A 1” for all producst