Meshing with LinkerD2 using gRPC-enabled .NET Core services

Microsoft Developer Community Blog

Microservices architectures certainly serve a noble cause: gaining agility, deploying faster, scaling each service out or in independently, etc. But they also come with numerous challenges not encountered with monoliths. Typical drawbacks include increased complexity due to the growing number of services, extra latency due to the distribution tax, a harder time getting a good overview, complex patterns to alleviate network saturation, higher security risks, etc. The list of drawbacks is at least as long as the list of benefits, but each problem has a solution! To illustrate these problems and some potential solutions, I created the grpc-calculator demo app and its REST-based equivalent, rest-calculator, both in .NET Core. The GitHub repos contain a step-by-step walkthrough on how to get these calculators up and running in your own K8s cluster. This blog post focuses on a high-level understanding of a Service Mesh and gRPC.

Why a calculator?

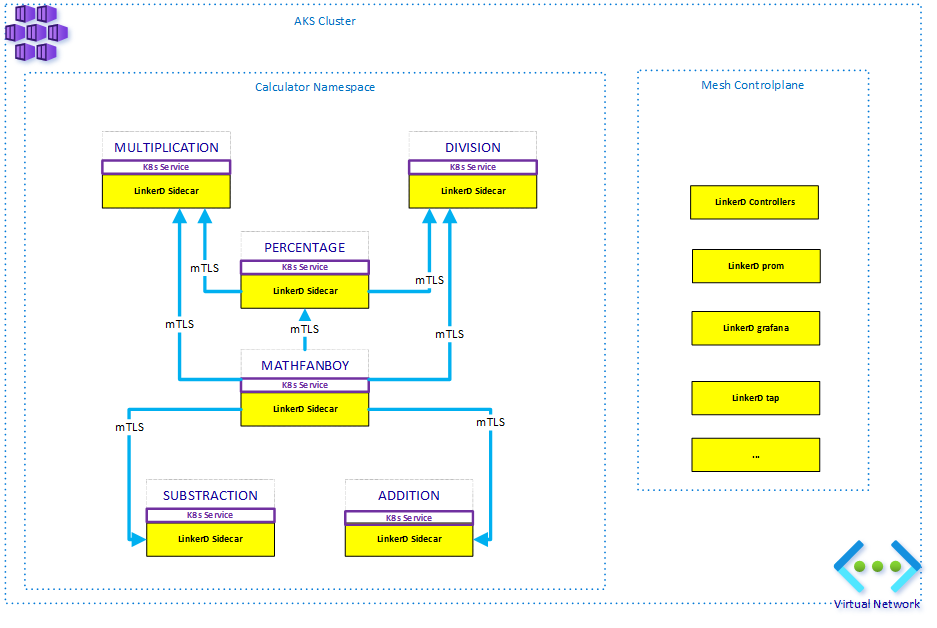

I thought a calculator could be an easy representation of a Microservices Architecture. We all know what a calculator is and each mathematical function could be seen as independent and sometimes reusing other functions. So, I implemented 5 different services: addition, multiplication, division, subtraction and percentage. The latter calls both multiplication and division. This, to increase the level of chatting between services. On top of these 5 services, I created the MathFanBoy that randomly calls (every 20ms) each operation and sometimes causes division by 0 exceptions, in order to see the Mesh in action. Here is a representation of the calculator app:

If I followed the same pattern for additional mathematical operations, I would inevitably end up with a substantial number of services calling each other, which would make it harder to monitor them and to keep a good level of performance.
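To make the call graph concrete, here is a minimal in-process sketch of the five operations. It is not the demo's code: in grpc-calculator each operation is a separate service in its own pod, and the `Calculator` class and its method names are hypothetical. It also assumes that percentage computes `value * percent / 100` by chaining a multiplication call and a division call. Because percentage calls both of them, multiplication and division receive roughly twice the traffic of the other operations, which matches the RPS figures shown later in this post.

```csharp
using System;

// Hypothetical, in-process stand-ins for the calculator services. In the demo, each
// operation runs as its own service and these calls go over the network.
public static class Calculator
{
    public static double Add(double a, double b) => a + b;

    public static double Subtract(double a, double b) => a - b;

    public static double Multiply(double a, double b) => a * b;

    // Floating-point division by zero would silently return Infinity, so throw explicitly:
    // this is the kind of failure that lowers the division success rate in the mesh.
    public static double Divide(double a, double b) =>
        b == 0 ? throw new DivideByZeroException("Cannot divide by zero.") : a / b;

    // Assumed formula: value * percent / 100, built from two downstream calls,
    // which is what makes percentage the "chattiest" service.
    public static double Percentage(double value, double percent) =>
        Divide(Multiply(value, percent), 100);
}
```

For example, `Calculator.Percentage(200, 15)` returns 30 after one call to `Multiply` and one to `Divide`, while `Calculator.Divide(1, 0)` throws a `DivideByZeroException`.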

Why gRPC?

For those who don't know what gRPC is all about, I invite you to read the official documentation at https://grpc.io/. As stated before, latency is one of the limiting factors of Microservices Architectures. gRPC leverages HTTP/2 to keep TCP connections alive and to benefit from features such as multiplexing, meaning that multiple requests are handled concurrently over the same TCP connection. This can also be achieved with REST over HTTP/2, but gRPC also has a more efficient (de)serialization process based on Protocol Buffers, aka protobuf. The key thing to remember is that running a Microservices Architecture over HTTP/1 will sooner or later lead to increased latency as the number of services grows, so it's not future-proof. Not convinced? Just look at the figures below, taken after about one minute of runtime and with very few round trips between services:

```text
PS C:\_dev\aks> linkerd stat pod -n rest-calculator
NAME                             STATUS    MESHED   SUCCESS   RPS       LATENCY_P50   LATENCY_P95   LATENCY_P99   TCP_CONN
addition-6dfc57f8c-5wnwn         Running   1/1      100.00%   29.6rps   1ms           1ms           6ms           8
division-774c85f7cb-5j2pb        Running   1/1      90.30%    59.8rps   1ms           2ms           7ms           12
mathfanboy-84d66c67b9-6xwrf      Running   1/1      -         -         -             -             -             -
mathfanboy-84d66c67b9-b6ksq      Running   1/1      -         -         -             -             -             -
mathfanboy-84d66c67b9-cpmml      Running   1/1      -         -         -             -             -             -
mathfanboy-84d66c67b9-lvl6h      Running   1/1      -         -         -             -             -             -
mathfanboy-84d66c67b9-q62m7      Running   1/1      -         -         -             -             -             -
multiplication-84d5b4d884-88vrl  Running   1/1      100.00%   60.7rps   1ms           1ms           7ms           11
percentage-6bbcdcc979-lvsk2      Running   1/1      100.00%   30.4rps   8ms           25ms          48ms          9
substraction-555db9956b-gc7f8    Running   1/1      100.00%   30.4rps   1ms           1ms           6ms           8
```

And here is the same output for the gRPC implementation:

```text
NAME                             STATUS    MESHED   SUCCESS   RPS       LATENCY_P50   LATENCY_P95   LATENCY_P99   TCP_CONN
addition-c879ffc75-b987k         Running   1/1      100.00%   36.3rps   1ms           2ms           6ms           6
division-58c76bcd4-4mlrb         Running   1/1      87.75%    70.2rps   1ms           2ms           7ms           8
mathfanboy-754c949488-2s788      Running   1/1      -         -         -             -             -             -
mathfanboy-754c949488-4gbv4      Running   1/1      -         -         -             -             -             -
mathfanboy-754c949488-b2rkx      Running   1/1      -         -         -             -             -             -
mathfanboy-754c949488-ghq99      Running   1/1      -         -         -             -             -             -
mathfanboy-754c949488-lx48b      Running   1/1      -         -         -             -             -             -
multiplication-6fd5b8ccb4-kldhq  Running   1/1      100.00%   71.8rps   1ms           1ms           5ms           8
percentage-d5cf7d8c4-gzlqc       Running   1/1      100.00%   35.7rps   4ms           15ms          19ms          6
substraction-85f64f7c76-g52mw    Running   1/1      100.00%   37.0rps   1ms           2ms           8ms           6
```
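To put a number on the RPS gap, here is the sum of the RPS column over the five operation pods of each output (in the order addition, division, multiplication, percentage, subtraction; the MathFanBoy pods report no inbound traffic). Requests issued by percentage are counted twice, once at percentage and once at multiplication or division, and these are one-minute samples rather than a controlled benchmark, so the ratio is only indicative.

```latex
\begin{aligned}
\text{REST:}\quad & 29.6 + 59.8 + 60.7 + 30.4 + 30.4 = 210.9\ \text{rps} \\
\text{gRPC:}\quad & 36.3 + 70.2 + 71.8 + 35.7 + 37.0 = 251.0\ \text{rps} \\
\text{ratio:}\quad & 251.0 / 210.9 \approx 1.19
\end{aligned}
```

That is roughly 19% more requests served by the gRPC pods in this sample.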

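Looking at the RPS column, we clearly see that the gRPC pods served more requests per second than their REST counterparts over the same period. The percentage service, the only one that calls other services, also shows much lower latency with gRPC (P50 of 4 ms vs 8 ms, P99 of 19 ms vs 48 ms).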
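Regarding the protobuf (de)serialization mentioned earlier, here is a rough illustration of why its payloads are compact: a minimal sketch of the wire format for a single unsigned integer field, compared with the equivalent JSON. It hand-rolls only the base-128 varint encoding described in the Protocol Buffers encoding documentation; a real service would use the generated code and the Google.Protobuf library instead, and the `WireSize` class is hypothetical.

```csharp
using System;
using System.Collections.Generic;
using System.Text;

// Hypothetical helper illustrating the protobuf wire format for one varint field.
// Not a replacement for Google.Protobuf.
public static class WireSize
{
    // Base-128 varint: 7 bits per byte, least-significant group first,
    // high bit set on every byte except the last.
    public static List<byte> EncodeVarint(ulong value)
    {
        var bytes = new List<byte>();
        while (value >= 0x80)
        {
            bytes.Add((byte)((value & 0x7F) | 0x80));
            value >>= 7;
        }
        bytes.Add((byte)value);
        return bytes;
    }

    // Field key = (field_number << 3) | wire_type; wire type 0 means varint.
    public static byte[] EncodeUInt64Field(int fieldNumber, ulong value)
    {
        var message = EncodeVarint((ulong)fieldNumber << 3);
        message.AddRange(EncodeVarint(value));
        return message.ToArray();
    }

    // Size of the equivalent JSON object, e.g. {"a":150}, encoded as UTF-8.
    public static int JsonSize(string fieldName, ulong value) =>
        Encoding.UTF8.GetByteCount($"{{\"{fieldName}\":{value}}}");
}
```

`WireSize.EncodeUInt64Field(1, 150)` yields the three bytes `08 96 01`, whereas the JSON `{"a":150}` takes 9 bytes: protobuf never sends field names, since both sides share the `.proto` contract. Size is only part of the story; decoding binary fields is also typically cheaper than parsing text.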

Why use a Mesh?

While gRPC, or REST over HTTP/2, addresses the latency aspect to some extent, it remains very hard to get a complete overview of a medium-sized application built on Microservices principles. There is also no guarantee that service-to-service communication is secured in a consistent way. Moreover, introducing HTTP/2 brings another big problem: most load balancers operate at the TCP level (layer 4) and know nothing about the protocols higher up in the stack (HTTP, gRPC, ...), which makes them unable to properly balance HTTP/2 traffic. Multiplexing lets many requests share a single TCP connection, but the balancing decision is made once per connection, so all of those requests keep going to the same backend. The diagram below shows what happens with an out-of-the-box K8s setup under high load:

If MathFanBoy starts to hammer the percentage operation, its associated Horizontal Pod Autoscaler will start scaling it out, adding new instances of the service because the single instance shows higher resource consumption. However, since MathFanBoy already holds a long-lived TCP connection to that single instance, it will not leverage the extra instances and will exhaust the single backend. Only new MathFanBoy instances, opening new connections, could end up talking to the newer instances of percentage.
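The scenario above can be reduced to a toy model of connection-level balancing: the backend is chosen once, when the connection is opened, and every multiplexed request then follows it. This is a hypothetical sketch, not how kube-proxy is implemented; the random pick at connect time only loosely mimics a plain K8s Service, and the class and pod names are made up for illustration.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical model of connection-level (layer 4) load balancing, as done by a plain
// K8s Service: a backend is chosen once, when the TCP connection is opened.
public sealed class Connection
{
    public Connection(string backend) => Backend = backend;

    public string Backend { get; }
}

public sealed class ConnectionLevelBalancer
{
    private readonly List<string> _pods;
    private readonly Random _random = new Random(42);

    public ConnectionLevelBalancer(IEnumerable<string> pods) => _pods = new List<string>(pods);

    public Dictionary<string, int> RequestsPerPod { get; } = new Dictionary<string, int>();

    // The autoscaler adds a pod; only connections opened from now on can reach it.
    public void ScaleOut(string pod) => _pods.Add(pod);

    // Random choice, made at connect time only.
    public Connection Connect() => new Connection(_pods[_random.Next(_pods.Count)]);

    // Every request multiplexed over the connection goes to the same backend.
    public void Send(Connection connection)
    {
        RequestsPerPod.TryGetValue(connection.Backend, out int count);
        RequestsPerPod[connection.Backend] = count + 1;
    }
}
```

With a single connection opened before the scale-out, sending 1,000 requests leaves `RequestsPerPod` with one entry, `percentage-1` at 1000, while the two pods added afterwards receive nothing.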

Of course, with HTTP/1.1 this is much less of a problem: a connection carries only one request at a time, so under load clients keep opening new TCP connections, and the K8s Service spreads those across the extra instances. With HTTP/2, we need a smarter approach, and that's what a Service Mesh such as LinkerD provides. Its proxy understands HTTP/2 and gRPC, so it balances individual requests rather than connections: it maintains connections to every backend pod and spreads the multiplexed requests across them. Moreover, it balances requests based on backend latency rather than with plain round robin.
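LinkerD's documentation describes its HTTP/2 and gRPC load balancing as latency-aware, based on an exponentially weighted moving average (EWMA) of response times. To illustrate the idea, here is a simplified sketch of a request-level balancer that combines an EWMA latency estimate with the "power of two choices" (P2C) strategy: draw two distinct backends at random and send the request to the one that currently looks faster. This is not LinkerD's code; the class name, the smoothing factor `alpha` and the optimistic treatment of never-observed backends are assumptions.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Simplified, hypothetical request-level balancer: "power of two choices" (P2C) over an
// exponentially weighted moving average (EWMA) of observed latency. Real mesh proxies also
// weigh in-flight requests and let old measurements decay; this sketch does neither.
public sealed class P2cEwmaBalancer
{
    private readonly string[] _backends;
    private readonly double?[] _ewmaMs;   // null until a backend has been observed
    private readonly double _alpha;       // weight of the newest latency sample (0..1]
    private readonly Random _random;

    public P2cEwmaBalancer(IEnumerable<string> backends, double alpha = 0.3, int seed = 1)
    {
        _backends = backends.ToArray();
        if (_backends.Length < 2)
            throw new ArgumentException("P2C needs at least two backends.", nameof(backends));
        _ewmaMs = new double?[_backends.Length];
        _alpha = alpha;
        _random = new Random(seed);
    }

    public string Name(int index) => _backends[index];

    // Unobserved backends are scored optimistically (0) so they get a chance to be tried.
    private double Score(int index) => _ewmaMs[index] ?? 0.0;

    // Draw two distinct backends at random and keep the one that currently looks faster.
    public int Pick()
    {
        int first = _random.Next(_backends.Length);
        int second = _random.Next(_backends.Length - 1);
        if (second >= first) second++;  // skip over 'first' so the pair is distinct
        return Score(second) < Score(first) ? second : first;
    }

    // Fold a new latency sample into the backend's moving average.
    public void Record(int index, double latencyMs) =>
        _ewmaMs[index] = _ewmaMs[index] is double previous
            ? _alpha * latencyMs + (1 - _alpha) * previous
            : latencyMs;
}
```

With two pods answering in 1 ms and a third in 10 ms, the slow pod receives at most one request: after its first sample, any pair that contains it is won by a faster pod. A production balancer would let that estimate decay so a recovered pod gets traffic again, which this sketch leaves out.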

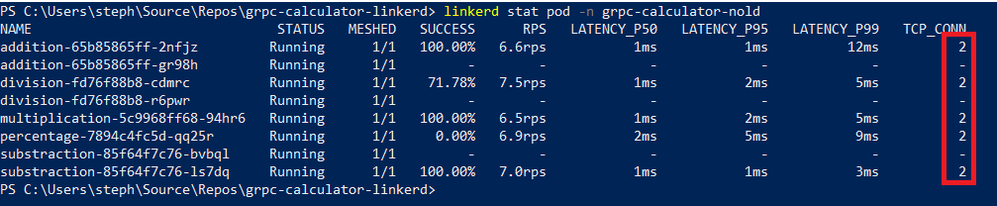

Here again, the gRPC repo ships with two YAML files: one that injects LinkerD everywhere, including into the MathFanBoy traffic generator, and another that leaves MathFanBoy out, meaning that the LinkerD proxy cannot play its role on the calling side. As stated before, we clearly see that K8s alone does not balance the load properly:

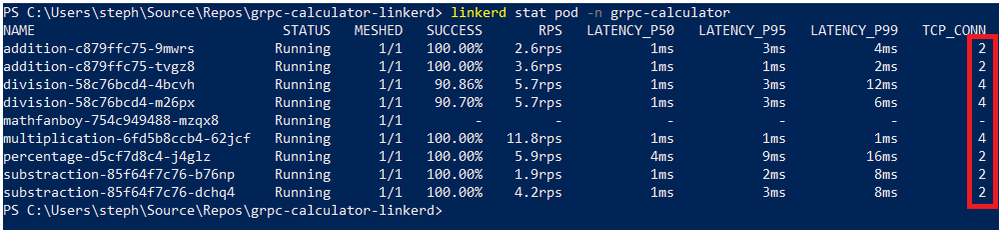

Although there are two addition, two division and two subtraction pods, only one pod of each pair is being addressed by MathFanBoy (which does not appear in the picture since it is not monitored by LinkerD). Now, the exact same setup with MathFanBoy injected shows:

an even distribution across all running pods, even though only a single MathFanBoy instance is running. This smart load balancing enables a proper scale-out story.

By the way, did you notice the success rate of the division operation (first column)? It's not 100% because of our random division-by-zero exceptions. LinkerD's dashboard makes these failures even easier to track down by showing the complete flow:

Speaking of the dashboard, here is LinkerD's visual representation of my first diagram, based on live traffic analysis:

Very nice! Every metric is also available in Prometheus and in the default Grafana dashboards.

With regards to security, LinkerD2 upgrades HTTP/gRPC traffic between meshed pods to mTLS, meaning that we get both encryption and mutual authentication, and certificates are rotated every 24 hours. In .NET Core, the default gRPC client and server implementations rely on TLS. When using a Mesh such as LinkerD, it is important not to enforce TLS in the backend, because the Mesh would then no longer be able to inspect the traffic; it's better to delegate this task to the Mesh. Therefore, you have to explicitly allow "insecure" (plaintext HTTP/2) communication in the .NET Core client using this piece of code:

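```csharp
using System;

// Set before creating any HttpClient/GrpcChannel: allows HTTP/2 without TLS (LinkerD adds mTLS).
AppContext.SetSwitch("System.Net.Http.SocketsHttpHandler.Http2UnencryptedSupport", true);
AppContext.SetSwitch("System.Net.Http.SocketsHttpHandler.Http2Support", true);
```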

Of course, if you are not using a Mesh, you'd better stick to the TLS defaults.

Wrap up!

When truly embracing Microservices, it is very important to anticipate their extra bits of complexity and to rely on Service Meshes such as LinkerD or Istio. Thanks to the grpc-calculator and rest-calculator apps, you can easily test this in your own K8s cluster and compare HTTP/1 vs HTTP/2, with or without a mesh, all based on .NET Core. In this post, I focused mostly on in-cluster traffic. In a later post, I'll explain how to bring this to ingress controllers and what limitations or compromises we might face.

What I like about LinkerD is its simplicity. It has fewer features than Istio, but you can get started right away, and that's exactly what the core contributors intend: something simple, fast and reliable!
